Understanding Embeddings: The Hidden Power Behind Language Models

Byamasu Patrick Paul

Oct 27, 2024 · 9 min read

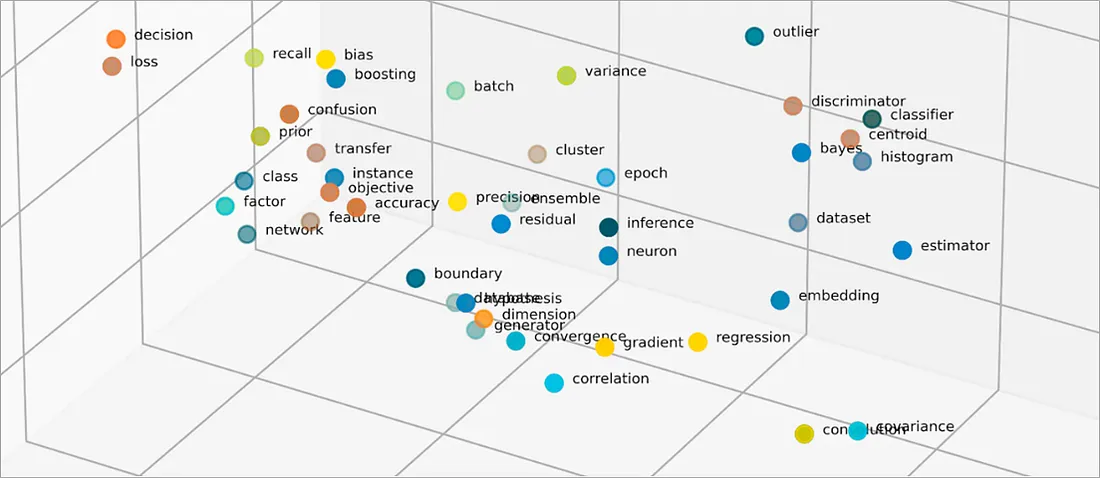

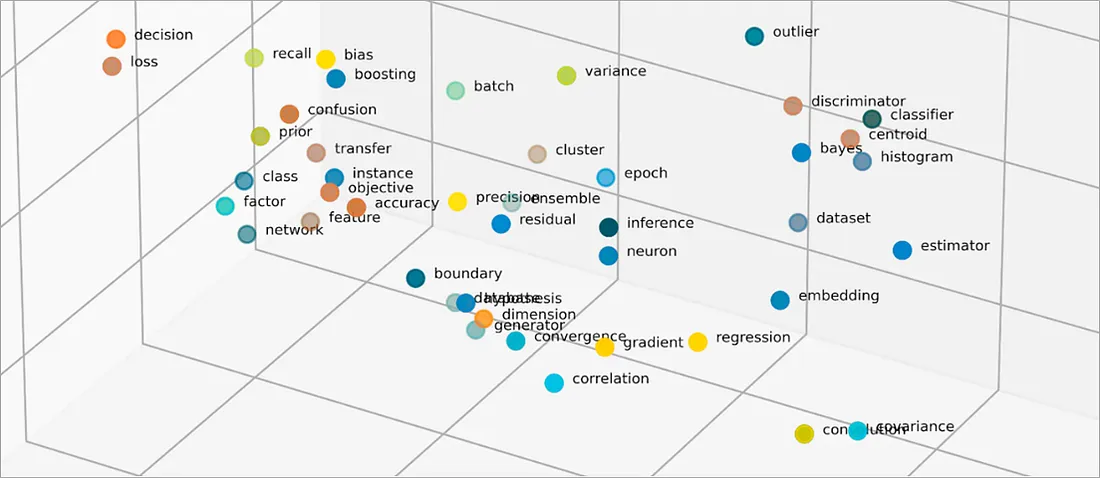

Large Language Models such as GPT, Claude, and Gemini rely on embeddings (dense vector representations of words and sub-word tokens) to generate accurate, context-aware responses. This post traces the history of embeddings, from early methods such as N-grams and One-Hot Encoding to the breakthrough of Word2Vec, and explains why embeddings are key to enabling AI tools to understand and process language effectively.
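To make the contrast between One-Hot Encoding and dense embeddings concrete, here is a minimal sketch in plain Python. The three-word vocabulary and the dense vectors are hypothetical, hand-picked values chosen only for illustration; they are not the output of Word2Vec or any trained model.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Toy vocabulary (assumption: real vocabularies hold many thousands of entries).
vocab = ["cat", "dog", "car"]

def one_hot(word):
    """One-hot vector: 1.0 at the word's index, 0.0 everywhere else."""
    return [1.0 if w == word else 0.0 for w in vocab]

# Hypothetical dense vectors, hand-picked so the two animals sit close together.
# A trained model would learn values like these from text.
dense = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

# Any two distinct one-hot vectors are orthogonal, so they carry no notion of similarity.
one_hot_cat_dog = cosine(one_hot("cat"), one_hot("dog"))

# Dense vectors can place related words closer together than unrelated ones.
dense_cat_dog = cosine(dense["cat"], dense["dog"])
dense_cat_car = cosine(dense["cat"], dense["car"])

print(f"one-hot cat vs dog: {one_hot_cat_dog:.2f}")
print(f"dense   cat vs dog: {dense_cat_dog:.2f}")
print(f"dense   cat vs car: {dense_cat_car:.2f}")
```

With these made-up values, the one-hot similarity between "cat" and "dog" is zero, while the dense vectors score "cat" and "dog" as much closer to each other than "cat" and "car". That graded notion of similarity is what a one-hot scheme cannot express.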

Full content coming soon...